At its heart, multimodal learning is all about teaching AI to understand the world more like we do, by using multiple senses at once. Instead of just reading text, a multimodal AI can process text, images, audio, and video together to get a much richer, more complete picture of what's going on.

So, What Is Multimodal Learning, Really?

Think about learning a new recipe. You probably wouldn't just read the instructions. You might also watch a cooking tutorial to see the technique and listen for the sound of food sizzling in the pan to know it’s cooking right. Each piece of information—or modality—adds a new layer to your understanding.

This is exactly what multimodal learning gives to an AI. An AI that only understands text might process a transcript about a dog barking, but it has no real clue what that sounds or looks like. A multimodal system, on the other hand, can actually connect the words "dog barking" with an audio clip of a bark and a photo of a dog.

Why One Data Type Just Isn't Enough

Sticking to a single stream of information gives an AI major blind spots. It’s like trying to understand a situation with one eye closed and both ears covered. You only get one piece of the puzzle, and you miss all the important context.

- Text-only AI: It can read just fine, but it completely misses out on visual cues, the tone of someone's voice, or background sounds.

- Image-only AI: It can identify objects in a picture but has no clue about the story or conversation happening around them.

- Audio-only AI: It can pick up on sounds but lacks the visual context to know where they're coming from or what’s causing them.

This is exactly why combining data types is such a big deal. It helps AI graduate from a narrow, one-dimensional view to a much more robust and human-like perception of the world.

By mixing different data types, a multimodal AI develops a deeper, more contextual understanding. It’s the difference between reading a sheet of music and actually hearing the full orchestra perform.

To make these ideas a bit more concrete, let's break down the core concepts of multimodal learning compared to the traditional, one-track approach.

Key Concepts in Multimodal Learning at a Glance

| Concept | Simple Explanation | Why It Matters |
|---|---|---|
| Modality | Just a fancy word for a type of data, like text, audio, or an image. | Combining modalities creates a richer understanding than any single one can on its own. |
| Unimodal Learning | AI learning from just one type of data (e.g., text only). | It's limited and lacks real-world context, kind of like reading a book with no pictures. |
| Multimodal Learning | AI learning from multiple data types at the same time. | This approach is way more like how humans learn, allowing for deeper insights and better conclusions. |
| Data Fusion | The techy process of blending information from different modalities. | This is the "how" behind multimodal AI; it’s where the magic of combining data actually happens. |

As you can see, the shift from unimodal to multimodal is all about adding depth and context, moving AI from a specialized tool to a more versatile and intelligent partner.

A Rapidly Growing Field

While the idea of learning from multiple sources isn't exactly new, recent tech breakthroughs have really pushed it into the spotlight. Interest in multimodal AI has been climbing since the mid-90s, but the last few years have seen a massive spike in academic attention.

Between 2021 and 2022 alone, the number of research papers published on the topic jumped from 77 to 106. According to research covered on Nature.com, this surge is partly thanks to better tech and the global shift toward remote learning. This intense focus shows just how crucial this multi-sensory approach is for building smarter AI that can interact with the world in a more meaningful way.

The Different Flavors of AI Data

In multimodal learning, data is the main ingredient, and it comes in a lot of different "flavors." To build an AI that truly understands the world, we have to feed it the same kinds of information we use every day. Think of these data types, or modalities, as the AI’s senses.

Let’s take a quick tour of the most common data types that power today’s smartest AI systems. You’ll probably recognize most of them from your daily life, since they're the digital building blocks of how we communicate and experience the world.

The Core Four: AI's Digital Senses

These are the big ones, the data types you interact with constantly. They form the foundation of most multimodal systems because they capture so much of how we share information.

- Text: This is the most direct one. It’s the words on this page, your social media posts, text messages, and product reviews. Text is compact, explicit, and states its information directly.

- Images: A picture really is worth a thousand words. Photos on Instagram, medical X-rays, and satellite imagery all fall into this category. Images provide crucial visual context that text alone can never quite capture.

- Audio: This covers everything you can hear—from Spotify playlists and the voice notes you send friends to the specific sound of an engine in a diagnostic recording. Audio carries emotional tone, environmental clues, and of course, spoken language.

- Video: Video is a powerhouse because it’s already multimodal. It combines images and audio over time. Your TikTok feed, a security camera recording, and a Zoom meeting are all perfect examples of video data in action.

While these four are the most common, they're really just the beginning. The magic of multimodal learning comes from its ability to weave in more specialized, and sometimes less obvious, forms of data to get an even clearer picture of reality.

Beyond the Basics: Specialized Data Types

To solve truly complex problems, AI often needs to look beyond standard media. This is where specialized data types come in, giving AI a much deeper, more technical understanding of its environment.

A fantastic example is 3D data. Self-driving cars, like those from Waymo, don't just "see" with cameras. They use LiDAR and other sensors to build a constantly updating 3D map of the world around them. This data gives them a precise spatial awareness that’s impossible to get from a flat 2D image. It's the difference between looking at a photo of a street and having a full, interactive map of it.

By combining traditional sensor data with the extensive world knowledge from large language models, AI systems gain a more nuanced understanding of complex real-world scenarios.

Then you have tabular data, which is basically anything you’d find in a spreadsheet. This includes sales figures, customer records, and scientific measurements. When you combine a patient's medical scan (image) with their lab results (tabular data), an AI can make a much more informed diagnosis.

Finally, there’s a whole universe of sensory data. This category includes info from highly specific sensors, like temperature readings from a weather station, motion data from a smartwatch, or pressure readings from industrial machinery. Each one provides a very precise, measurable piece of the puzzle.

By pulling from this incredible variety of information, a multimodal system becomes far more versatile and context-aware, moving one step closer to a truly comprehensive understanding of the world.

How AI Combines Different Data Streams

So, how does an AI actually make sense of all this different information at once? It’s not as simple as just throwing text, images, and audio files into a digital blender and hitting "start." There's a surprisingly cool process happening under the hood, and you don’t need a Ph.D. in computer science to get the gist of it.

Let's use an analogy. Think of the AI as a detective working on a complex case. This detective gets a file filled with different kinds of evidence: a witness statement (that's our text), a grainy security camera clip (video), and a recording of a crucial phone call (audio).

No single piece of evidence tells the whole story. The detective's real skill is in pulling the most important details from each one and then weaving them together to figure out what actually happened.

Extracting the Important Clues

The first thing our AI detective does is something called feature extraction. This is where the model meticulously sifts through each data stream to find the most meaningful bits of information—the clues. It’s a lot like our human detective taking a highlighter to a transcript or circling a face in a photograph.

- For an image: The AI might identify objects, faces, specific colors, and how everything is arranged in the scene.

- For an audio clip: It could pick out specific words, but also listen for the speaker's tone of voice—are they happy, angry, or sarcastic? It also notes any background noises.

- For a text document: It's looking for keywords, sentence structure, and the underlying emotion or sentiment.

This step basically translates all the raw data into a compact, numerical language the AI can work with. It's how the model zeroes in on what's important without getting bogged down by useless details.
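To make "translating raw data into numbers" a bit more concrete, here's a minimal sketch in Python. Everything in it is an illustrative assumption: the function names, the tiny vocabulary, and the hand-picked features (word counts, brightness, loudness). Real multimodal systems learn their features with neural encoders rather than computing simple statistics like these.

```python
import math

def text_features(text, vocabulary):
    """Count how often each vocabulary word appears (a bag-of-words vector)."""
    words = text.lower().split()
    return [float(words.count(term)) for term in vocabulary]

def image_features(pixels):
    """Summarize a grayscale image (rows of 0-255 values) as brightness and contrast."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    variance = sum((value - mean) ** 2 for value in flat) / len(flat)
    return [mean / 255.0, math.sqrt(variance) / 255.0]

def audio_features(samples):
    """Summarize a waveform (values in -1..1) as loudness (RMS) and zero-crossing rate."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    return [rms, crossings / (len(samples) - 1)]

# Hypothetical toy inputs, one per modality.
vocab = ["dog", "bark", "car"]
text_vec = text_features("The dog began to bark and bark", vocab)
image_vec = image_features([[0, 255], [255, 0]])
audio_vec = audio_features([0.5, -0.5, 0.5, -0.5])

print(text_vec)   # [1.0, 2.0, 0.0]
print(image_vec)
print(audio_vec)
```

The point is just the shape of the result: three very different inputs each end up as a short list of numbers, which is the form a model needs before it can combine them.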

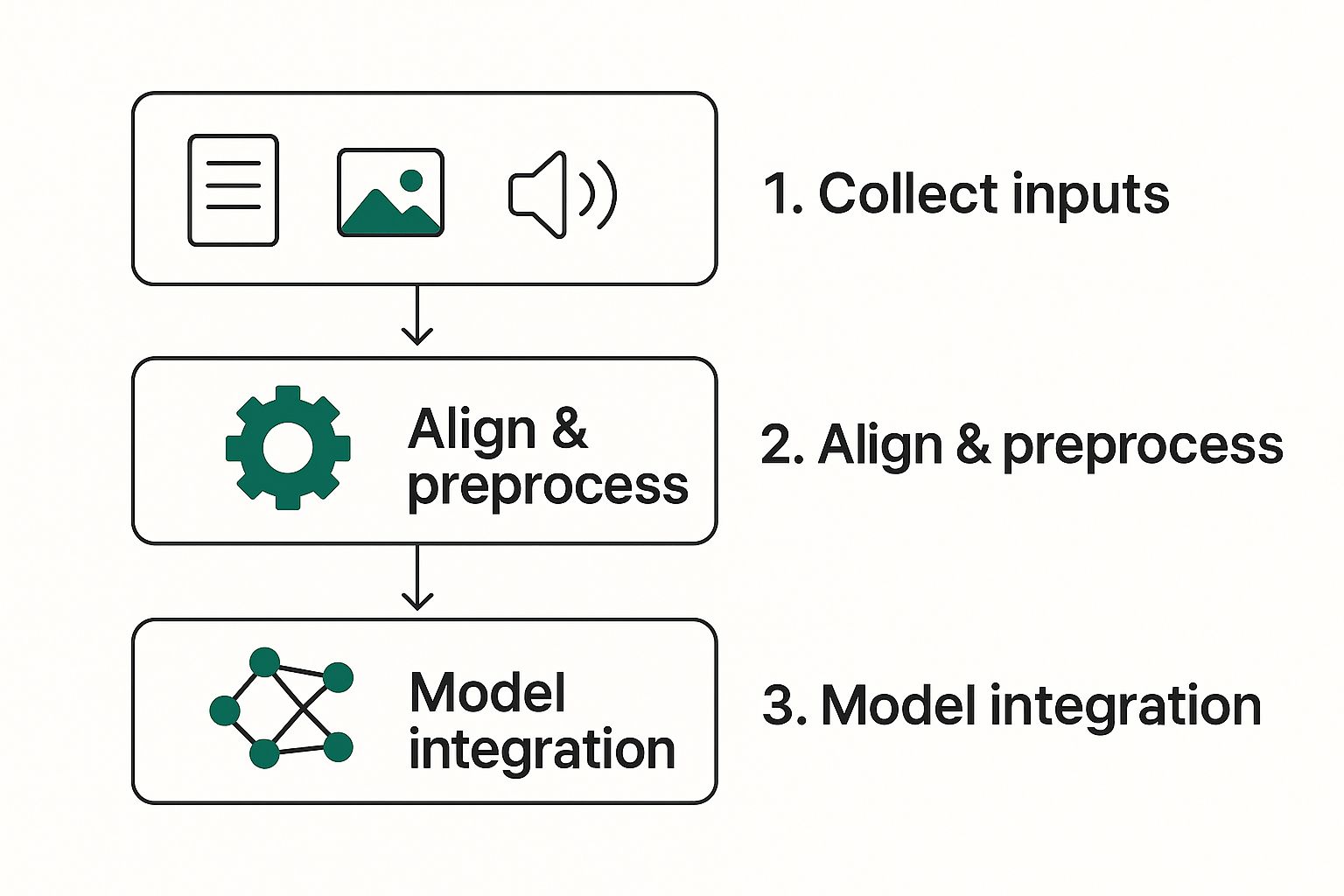

Here’s a look at the basic workflow for combining these different data types in a multimodal system.

As you can see, the process starts by gathering different inputs, then mashes them together into a single, unified model that can make an intelligent decision.

Fusing the Evidence Together

After the AI has pulled out the key features from each data stream, it's time for the real magic: fusion. This is the art of blending these different pieces of information into a single, coherent picture. This is the moment our detective spreads the highlighted testimony, the important video frames, and the key audio snippets across the desk and starts connecting the dots.

Now, there isn't just one way to do this. AI engineers have a few different strategies for fusing data, and the one they choose depends on what they're trying to do.

- Early Fusion: This is like mixing all your ingredients together in a bowl right at the start. The AI combines the features from all modalities early on and processes them as one big chunk of information.

- Late Fusion: This is more like cooking different parts of a meal separately and only putting them together on the plate. Here, the AI analyzes each data stream on its own first, then merges the final results to make a decision.

- Hybrid Fusion: You guessed it—this is a mix of both. It can involve multiple stages of fusion, which allows for much more complex and subtle interactions between the different data streams.

The goal of fusion is to create a combined understanding that is more robust and accurate than what any single modality could provide alone. For example, if a video shows a person smiling while the transcript reads "I'm so angry," a well-fused model can notice the mismatch and treat it as a sign of possible sarcasm, something neither stream reveals on its own.
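The three fusion strategies can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the feature vectors and weights are made-up numbers, the "models" are plain weighted sums, and the function names are hypothetical. Real systems use trained neural networks at each step.

```python
def linear_score(vec, weights):
    """A stand-in for a trained model: a weighted sum of the features."""
    return sum(w * x for w, x in zip(weights, vec))

def early_fusion(text_vec, image_vec, joint_weights):
    """Concatenate the features first, then score the joint vector with ONE model."""
    joint = text_vec + image_vec  # list concatenation
    return linear_score(joint, joint_weights)

def late_fusion(text_vec, image_vec, text_weights, image_weights):
    """Score each modality with its OWN model, then average the two decisions."""
    text_score = linear_score(text_vec, text_weights)
    image_score = linear_score(image_vec, image_weights)
    return 0.5 * text_score + 0.5 * image_score

def hybrid_fusion(early_score, late_score):
    """One possible hybrid: blend the early-fusion and late-fusion outputs."""
    return 0.5 * early_score + 0.5 * late_score

# Made-up features and weights, purely for illustration.
text_vec = [1.0, 0.0]
image_vec = [0.5, 0.5]

early_score = early_fusion(text_vec, image_vec, [0.4, 0.1, 0.6, 0.2])
late_score = late_fusion(text_vec, image_vec, [0.9, 0.1], [0.6, 0.2])
hybrid_score = hybrid_fusion(early_score, late_score)

print(round(early_score, 3), round(late_score, 3), round(hybrid_score, 3))
# 0.8 0.65 0.725
```

Notice the structural difference: early fusion has one model that sees everything at once, while late fusion never lets the two modalities interact until the final averaging step. That trade-off is a big part of why engineers pick one over the other.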

The engine driving this whole intricate process is usually a complex architecture known as a neural network. These are algorithms inspired by the human brain that are exceptionally good at finding hidden patterns and relationships in data. If you're curious about the mechanics, you can get a clearer picture by demystifying neural networks in our beginner's guide. Understanding how these networks work really shines a light on what makes multimodal learning so powerful.

Seeing Multimodal AI in Your Daily Life

All this talk about data fusion and AI detectives can feel a bit abstract. But the truth is, you're already using multimodal learning systems every single day. The concept really clicks when you see it in action, often working quietly behind the scenes to make your favorite apps and gadgets feel so intuitive.

Many of these applications are so well-integrated into our lives that we don’t even notice the complex data blending that makes them possible. From simple conveniences to world-changing technologies, multimodal AI is no longer the stuff of science fiction; it’s a real, practical part of our world.

The Smartphone in Your Pocket

Your phone is the perfect place to start. Just think about your photo gallery. When you search for "dog," your phone instantly digs up every picture of your furry friend. That’s not magic—it's multimodal learning at its best. The system connects your text input ("dog") with the visual data in your images to understand the request.
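Here's a rough sketch of how that kind of search can work, assuming the text query and every photo have already been turned into vectors in the same shared space. The vectors, file names, and function names below are invented for illustration; an actual phone would get its vectors from trained text and image encoders, and the details of any particular gallery app aren't public.

```python
import math

def cosine_similarity(a, b):
    """Measure how closely two vectors point in the same direction (1.0 = identical)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search_photos(query_vec, photo_vecs, top_k=2):
    """Rank photos by similarity to the query vector and return the best matches."""
    ranked = sorted(
        photo_vecs,
        key=lambda name: cosine_similarity(query_vec, photo_vecs[name]),
        reverse=True,
    )
    return ranked[:top_k]

# Hypothetical embeddings: pretend an image encoder produced these.
photo_vecs = {
    "beach.jpg": [0.1, 0.9, 0.2],
    "puppy.jpg": [0.9, 0.1, 0.3],
    "park_dog.jpg": [0.8, 0.3, 0.1],
}
# Pretend a text encoder turned the word "dog" into this vector.
dog_query = [1.0, 0.0, 0.2]

results = search_photos(dog_query, photo_vecs)
print(results)  # ['puppy.jpg', 'park_dog.jpg']
```

The search never compares words to pixels directly. It compares numbers to numbers, which only works because both encoders were trained to place related text and images close together.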

The same thing happens with your smart assistant. Ask it, "What does the Eiffel Tower look like?" and it processes your audio command, figures out the question, and then fetches visual results—images and maps—to give you a complete answer. It’s a seamless blend of voice, text, and image data.

The Future of Transportation

Beyond your personal gadgets, multimodal AI is the engine driving some of today's biggest technological leaps, especially in self-driving cars. Autonomous vehicles need an incredibly sophisticated sense of their surroundings, and they get it by processing a constant stream of information from multiple sources at once.

A self-driving car’s system is constantly combining data from:

- Cameras (Image/Video): To "see" the road, read traffic signs, and spot pedestrians.

- LiDAR (3D Data): To build a precise, three-dimensional map of its environment by measuring distances with laser pulses.

- GPS (Positional Data): To know its exact location and follow the planned route.

- Radar (Sensory Data): To track the speed and distance of other cars, even in rain or fog.

By fusing all these streams together, the car builds a comprehensive, real-time picture that's far more reliable than what any single sensor could provide. It's this layered perception that allows it to make safe, split-second decisions.
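To see why a fused estimate can beat any single sensor, here's a simplified sketch using inverse-variance weighting, a textbook way to combine noisy measurements of the same quantity. It assumes each sensor's error is independent and unbiased, and the readings and variances are made up. Production driving stacks are far more involved than this, so treat it as an illustration of the principle only.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs, trusting low-variance sensors more."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    fused_variance = 1.0 / total  # always smaller than the smallest input variance
    return fused_value, fused_variance

# Hypothetical distance-to-car-ahead readings in meters: (estimate, variance).
readings = [
    (20.0, 4.0),  # camera: rough depth estimate
    (22.0, 1.0),  # LiDAR: most precise in this toy example
    (21.0, 4.0),  # radar
]

fused_value, fused_variance = fuse_estimates(readings)
print(round(fused_value, 3), round(fused_variance, 3))  # 21.5 0.667
```

The fused variance (about 0.667) is lower than even the best single sensor's variance (1.0), which is the numerical version of "more reliable than any single sensor."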

Breakthroughs in Healthcare and Creativity

The impact of multimodal learning is also making waves in critical fields like medicine and even the creative arts. In healthcare, AI is changing how doctors diagnose diseases. By analyzing a patient's medical scans (images) alongside their written clinical notes (text) and lab results (tabular data), AI models can spot subtle patterns a human might miss, leading to earlier and more accurate diagnoses.

This technology isn’t just about processing data; it’s about creating a deeper, more contextual understanding that can lead to life-saving insights.

This idea of mixing data types isn't brand new. The roots of multimodal learning trace back to the late 20th century with the rise of multimedia software that combined text, graphics, and audio for education. What started as a way to make learning more engaging has evolved into the complex neural networks we see today. You can get a great overview of this evolution from Deepgram's AI glossary.

On the creative side, you've probably seen the explosion of AI-powered art and video tools. When you give a tool like Midjourney a text prompt—say, "a futuristic city at sunset in a synthwave style"—it translates your text description into a stunning, detailed image. That’s a direct application of multimodal learning, building a bridge between language and visual art.

This same principle is what allows creators to turn written articles into engaging audio, which is completely changing how we learn and consume information. The rise of AI-powered podcasts that are changing education is a perfect example of this in action, making knowledge more accessible than ever.

The Real Benefits of a Multi-Layered Approach

So, why go to all the trouble of teaching an AI to process different kinds of data at once? The payoff is huge. When you move from a single data stream to a multi-layered approach, you give an AI a much more robust and flexible way to see the world. The benefits aren't just technical—they lead directly to smarter, safer, and more capable tools.

Let's break down the three main advantages that make multimodal learning such a big deal for the future of intelligent systems.

Deeper Understanding and Better Accuracy

First and foremost, a multi-layered approach leads to a much deeper and more accurate understanding. Think of an AI trying to identify a dog. If it only has an image to work with, it might get confused if the picture is blurry or taken from a weird angle.

But what if that same AI can also hear the sound of a bark and read a caption that says, "Here's a photo of my Golden Retriever"? Suddenly, its confidence skyrockets. Each piece of data backs up the others, correcting potential mistakes and filling in the gaps.

This layered analysis helps eliminate ambiguity and leads to far more reliable conclusions—something that’s absolutely critical for everything from medical diagnostics to self-driving cars.
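The "each piece of data backs up the others" idea can be written down as a small probability calculation. The sketch below uses a naive Bayes style update, which assumes the pieces of evidence are independent of each other. The prior and the likelihood ratios are invented numbers chosen for illustration, not measurements from any real model.

```python
def combine_evidence(prior, likelihood_ratios):
    """Update a prior probability with independent pieces of evidence.

    Each likelihood ratio says how much more likely the evidence is
    if the hypothesis ("it's a dog") is true than if it is false.
    """
    odds = prior / (1.0 - prior)
    for ratio in likelihood_ratios:
        odds *= ratio
    return odds / (1.0 + odds)

prior = 0.5           # no idea either way
blurry_image = 1.5    # weak visual evidence
bark_audio = 4.0      # a bark is fairly strong evidence
caption_text = 9.0    # "my Golden Retriever" is strong evidence

image_only = combine_evidence(prior, [blurry_image])
image_audio = combine_evidence(prior, [blurry_image, bark_audio])
all_three = combine_evidence(prior, [blurry_image, bark_audio, caption_text])

print(round(image_only, 3), round(image_audio, 3), round(all_three, 3))
# 0.6 0.857 0.982
```

With the blurry image alone the model is only 60% sure. Adding the bark and the caption pushes that to roughly 98%, even though no single clue was conclusive.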

Grasping True Human Context

The world is messy and complicated. We humans rely on context, nuance, and non-verbal cues to communicate, and this is where single-modality AI often falls flat. A text-only model simply can't detect the sarcasm in someone's voice or the real meaning behind a facial expression.

Multimodal systems have a much better shot. By combining what is said (the words), how it's said (the tone of voice in the audio), and the body language that goes with it (video), the AI gets a fuller picture. It can begin to infer intent and emotion in a way that feels much more human.

This ability to grasp context is the key to creating AI that can interact with us naturally, from smarter customer service bots to more empathetic digital assistants.

Unlocking Brand New Capabilities

Perhaps the most exciting benefit is that multimodal learning unlocks entirely new possibilities. It's the engine behind the creative AI tools that have captured everyone's imagination.

- Text-to-Video Generation: Tools like OpenAI's Sora can take a simple text prompt and generate a high-quality video clip from scratch.

- Video-to-Audio Generation: AI can analyze a silent film (video) and compose a fitting soundtrack (audio) to match the on-screen action and emotion.

These creative leaps are only possible because the AI can translate concepts from one modality to another—a skill that has massive implications. For instance, this same technology can help you turn a detailed article into a podcast, making your content accessible to a whole new audience.

The evidence for this multi-sensory approach is compelling. Research synthesizing multiple studies found that students showed significant learning gains when instruction combined visuals with narration or interaction. A review covering nearly 6,000 students confirmed that well-designed multimodal strategies enhance higher-order skills like problem-solving and application. You can explore more about these findings on curriculumredesign.org.

This just goes to show that learning—whether for humans or machines—is simply better when more senses are involved.

To put it all in perspective, let's look at a direct comparison of what AI can do with one sense versus multiple senses.

Single-Modality vs. Multimodal Learning Benefits

| Feature | Single-Modality AI (e.g., Text-Only) | Multimodal AI (e.g., Text + Image + Audio) |
|---|---|---|
| Contextual Understanding | Limited to the literal meaning of words; misses sarcasm and emotional cues. | Understands tone, facial expressions, and body language for a complete picture. |
| Accuracy & Reliability | Can be easily fooled by ambiguous or poor-quality data (e.g., a blurry photo). | Cross-references multiple data types to confirm information and reduce errors. |
| Problem-Solving | Solves problems within its own domain (e.g., summarizing an article). | Can connect ideas across different domains (e.g., generating an image from a story). |
| Human Interaction | Interactions can feel robotic and lack nuance, often leading to frustration. | Enables more natural, empathetic, and intuitive conversations with users. |
| Creative Potential | Limited to manipulating a single data type (e.g., rewriting text). | Capable of true cross-modal creation, like generating video from text or music from images. |

As you can see, the jump from single-modality to multimodal isn't just a small step up—it’s a fundamental shift in what AI is capable of achieving.

Your Questions About Multimodal Learning, Answered

Still got a few questions? Good. That means you're thinking critically about this. Let's walk through some of the most common questions that pop up when people first start exploring multimodal learning. This should clear up any final fuzzy spots.

We'll cover a few foundational ideas, some practical distinctions, and the real-world hurdles that researchers are working on right now.

Is This a New AI Concept?

Not really, but its recent explosion in capability definitely is. The basic idea of combining different types of data—like text and images—has been around since the early days of multimedia. What’s changed is the sheer power we now have to process it all.

Think of it this way: we’ve always had the ingredients for a complex meal (text, images, audio), but we've only just built an oven powerful enough to cook them together into something truly amazing. Recent breakthroughs in deep learning and computing are that oven. It's why multimodal AI has gone from a niche research topic to something many of us use daily through models like GPT-4o.

What's the Difference Between Multimodal and Multi-Task Learning?

This is a fantastic question, and it's easy to get them mixed up because the names sound so alike. The simplest way to keep them straight is to focus on inputs vs. outputs.

Multimodal learning is about understanding one thing from different types of input data. Think of watching a movie clip and reading its subtitles to understand the scene.

Multi-task learning is about training one model to do several different output jobs, often from a single type of input. For instance, feeding it a paragraph of text and asking it to both translate it and summarize it.

So, one is about combining senses to get a complete picture, while the other is about being a skilled jack-of-all-trades.

What Are the Biggest Challenges in Multimodal Learning?

While the potential is enormous, making it work seamlessly is tough. Researchers are constantly bumping up against a few key challenges that make building these complex systems a serious engineering puzzle.

The three main hurdles are:

- Data Alignment: This is a huge one. It’s all about teaching the AI to correctly link different streams of data. For example, it has to learn that the sound of a bark belongs to the image of a dog, not a picture of a car. It's about connecting the right dots.

- Data Fusion: Once the data is aligned, how do you actually blend it together? This is the technical challenge of figuring out the best way to merge information from different sources to get the most accurate result. It's a surprisingly complex problem.

- Massive Datasets: You can't build a powerful multimodal AI without gigantic, high-quality datasets. And these aren't just any datasets—they need to contain multiple, related data types. Compiling and cleaning this much information is an incredibly expensive and time-consuming job.
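Alignment comes in several flavors. One of the simplest to show in code is lining data up in time: deciding which video frame goes with which sound. The sketch below is a minimal example under stated assumptions. The timestamps are made up, the function name is hypothetical, and it only handles timing, not the harder problem of learning that a bark belongs with a dog.

```python
from bisect import bisect_left

def align_to_frames(event_times, frame_times):
    """For each audio event time, return the index of the nearest video frame.

    Assumes frame_times is sorted in ascending order.
    """
    aligned = []
    for t in event_times:
        i = bisect_left(frame_times, t)
        # The nearest frame is either just before or just after position i.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(frame_times)]
        aligned.append(min(candidates, key=lambda j: abs(frame_times[j] - t)))
    return aligned

# Hypothetical timestamps in seconds.
frame_times = [0.0, 0.5, 1.0, 1.5, 2.0]  # one video frame every half second
bark_times = [0.45, 1.3, 2.4]            # moments when a bark was detected

frame_indices = align_to_frames(bark_times, frame_times)
print(frame_indices)  # [1, 3, 4]
```

Even this easy case has wrinkles, like the last bark falling after the final frame. Semantic alignment across millions of unlabeled clips is where the real difficulty lies.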

Solving these problems is what will unlock the next wave of even more capable and intuitive AI.
